As part of three theses, we are developing an artificial intelligence-based fall risk monitoring prototype that relies on recording a person’s daily activities with several sensors (radar, event-based camera, motion capture, pressure sensor mat, classic camera). A capture system using these sensors will be set up to acquire the data. In this study, we address the problem of classifying the daily movement activities of healthy people with disabilities. Here, we describe the various sensors and the associated data used to build the database.

multimodal.activites.dataset@etis-lab.fr

People:

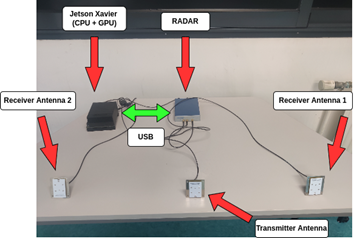

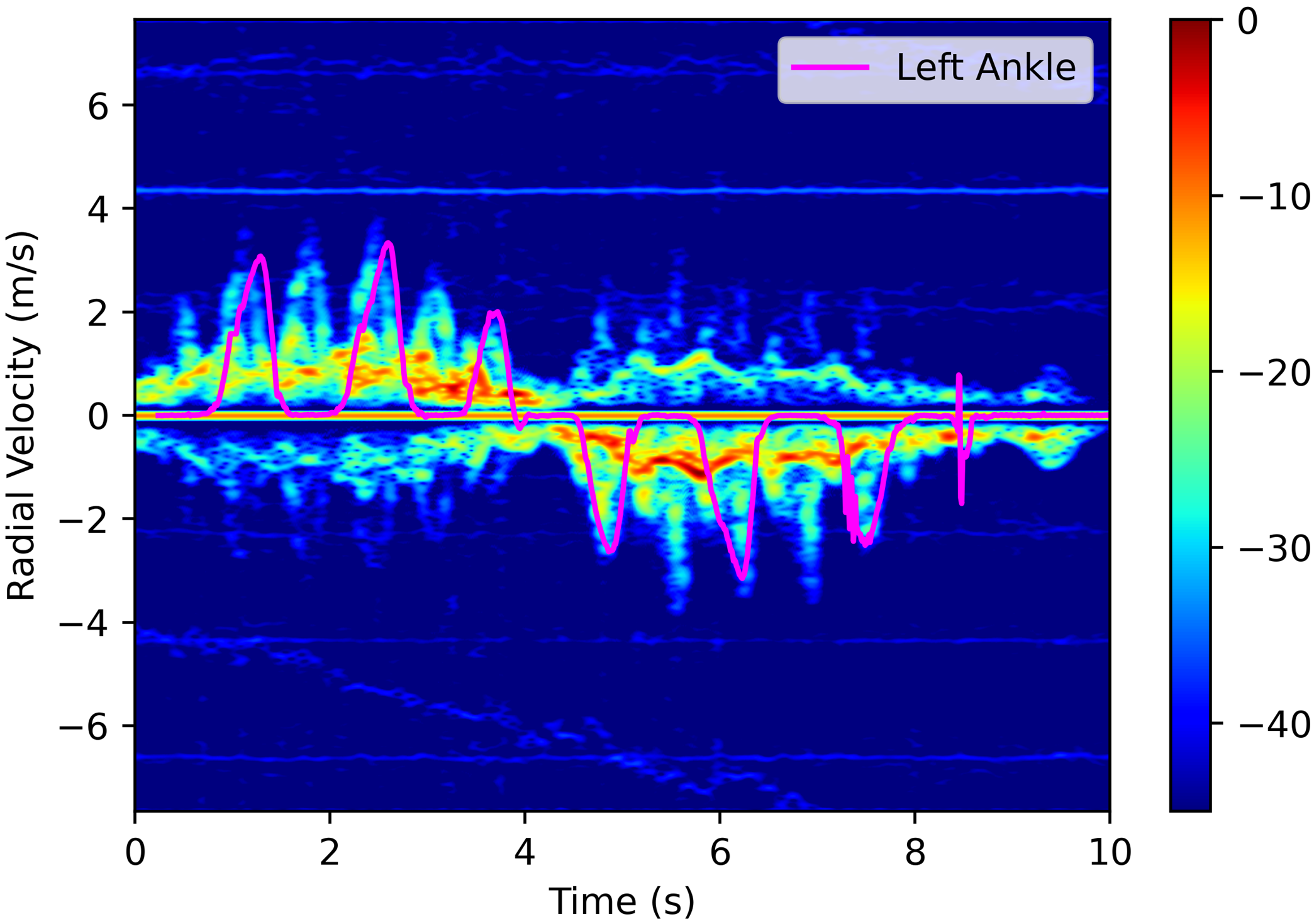

The radar sends an electromagnetic wave towards a target (the person) and records the echo of this wave reflected by the target. The reflected wave carries several valuable pieces of information, including the target's radial distance from the radar and its radial velocity relative to the radar. This information can be useful for recognizing an activity and detecting fall risk.

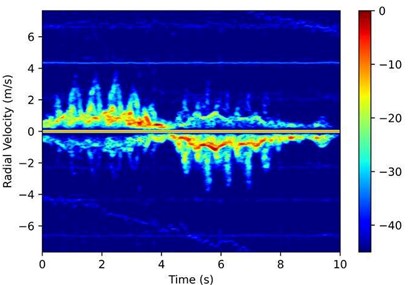

Following the recording of radar data, a series of processing steps is applied to the raw data to obtain a spectrogram.

The spectrogram represents the radial velocity of the target as a function of time.
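The exact processing chain is not detailed here. As an illustration only, the sketch below shows one common way to obtain a velocity-time spectrogram: a short-time Fourier transform of a complex radar signal, followed by the Doppler relation v = f_d · c / (2 · f_c). The function name, the sampling rate, the carrier frequency, the window settings, and the sign convention are all assumptions chosen for the example, not the parameters of the radar used in this project.

```python
import numpy as np

C = 3.0e8  # speed of light in m/s


def doppler_spectrogram(signal, sample_rate_hz, carrier_hz, window=128, hop=32):
    """Short-time Fourier transform of a complex radar signal (illustrative).

    Returns (times_s, velocities_mps, power_db); power_db has shape
    (window, n_frames), one row per velocity bin.
    """
    signal = np.asarray(signal, dtype=complex)
    taper = np.hanning(window)
    starts = np.arange(0, len(signal) - window + 1, hop)
    # Cut the signal into overlapping, tapered segments.
    frames = np.stack([signal[s:s + window] * taper for s in starts])
    # One FFT per segment, with zero Doppler moved to the center.
    spectrum = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    power_db = 10.0 * np.log10(np.abs(spectrum.T) ** 2 + 1e-12)
    doppler_hz = np.fft.fftshift(np.fft.fftfreq(window, d=1.0 / sample_rate_hz))
    # Doppler frequency to radial velocity (sign convention is an assumption).
    velocities = doppler_hz * C / (2.0 * carrier_hz)
    times = (starts + window / 2) / sample_rate_hz
    return times, velocities, power_db


# Synthetic check: a single target with a constant radial velocity of 1.5 m/s.
fs, fc = 1000.0, 5.8e9  # hypothetical sampling rate and carrier frequency
t = np.arange(2000) / fs
f_d = 2.0 * 1.5 * fc / C  # Doppler shift produced by 1.5 m/s
echo = np.exp(2j * np.pi * f_d * t)
times, velocities, power_db = doppler_spectrogram(echo, fs, fc)
peak_velocity = velocities[np.argmax(power_db[:, 0])]
```

On this synthetic echo, the strongest velocity bin of the first frame lies within one bin width (about 0.2 m/s with these settings) of the simulated 1.5 m/s. A longer window gives finer velocity resolution at the cost of temporal resolution.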

Picture of the event-based camera and video explanation of how it works, from https://www.youtube.com/watch?v=kPCZESVfHoQ

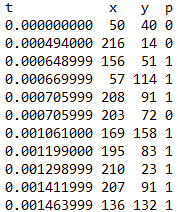

The event-based camera is a recent high-speed acquisition technology. The sensor is made up of independent pixels, each of which acquires new data only when it detects a change in brightness in its field of view. When a change is detected, the camera records an event consisting of the position (x, y) of the pixel concerned, the timestamp t of the change in brightness, and the polarity p of the change. The polarity indicates whether the brightness is increasing or decreasing. Such a camera eliminates data redundancy, as only motion, which is the useful information, is retained: if no motion is detected, no event is recorded. Because the camera is asynchronous, motion can be characterized without blur and, thanks to its low latency, with high temporal resolution, unlike with classic cameras.

The data acquired by the event-based camera is a succession of events, each made up of pixel coordinates (x, y), a timestamp t for the change in brightness, and a polarity p. This data is stored in a “.raw” file.

This data can then be transformed into a sequence of images to obtain a visual representation. With this representation, only the person's build can be characterized. As seen in the video, the spatial resolution is not sufficient to characterize the face. Furthermore, only polarity is acquired: it is equal to 1 if the brightness is higher than before, or to -1 if the brightness is lower than before. No color is recorded, so it is not possible to obtain color information such as hair color.
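As an illustration of this transformation, the sketch below accumulates event polarities into fixed-duration frames. It assumes the events have already been decoded from the “.raw” file into arrays of x, y, t, and p, which is not shown here. It also assumes timestamps in microseconds. The function name, the frame duration, and the tiny sensor size are hypothetical choices for the example.

```python
import numpy as np


def events_to_frames(x, y, t, p, width, height, frame_duration_us):
    """Sum event polarities per pixel over consecutive time slices.

    x, y: pixel coordinates; t: timestamps (assumed microseconds);
    p: polarity, +1 or -1. Returns an array of shape (n_frames, height, width).
    """
    x = np.asarray(x, dtype=np.intp)
    y = np.asarray(y, dtype=np.intp)
    t = np.asarray(t, dtype=np.int64)
    p = np.asarray(p, dtype=np.int32)
    if t.size == 0:
        return np.zeros((0, height, width), dtype=np.int32)
    # Index of the time slice each event falls into.
    frame_index = (t - t.min()) // frame_duration_us
    frames = np.zeros((int(frame_index.max()) + 1, height, width), dtype=np.int32)
    # np.add.at accumulates correctly when a pixel fires several times.
    np.add.at(frames, (frame_index, y, x), p)
    return frames


# Four hand-made events on a 3x2 sensor, grouped into 10 ms frames.
frames = events_to_frames(
    x=[0, 1, 1, 2], y=[0, 0, 0, 1],
    t=[0, 10, 20, 15000], p=[1, -1, -1, 1],
    width=3, height=2, frame_duration_us=10000,
)
```

Pixels with no events stay at zero, so a static scene produces empty frames, which matches the absence of recorded events when nothing moves.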

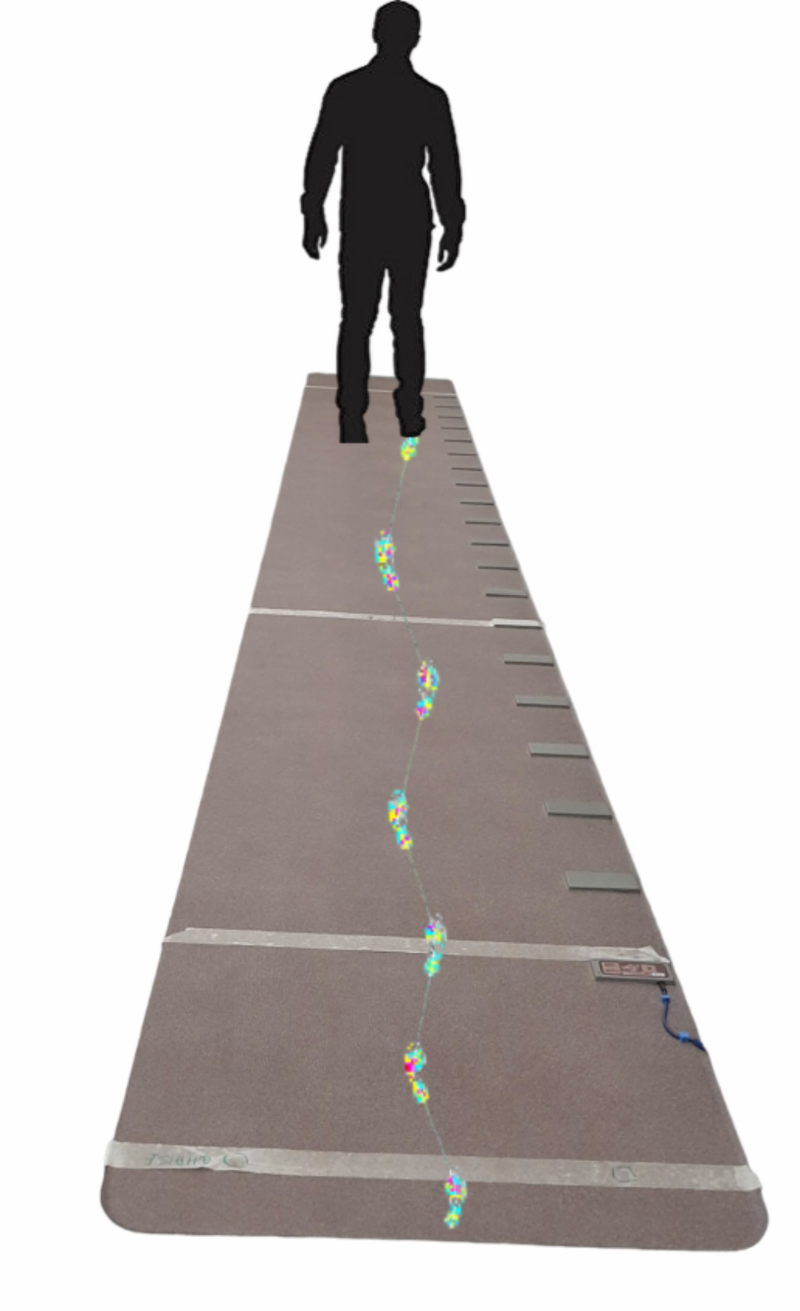

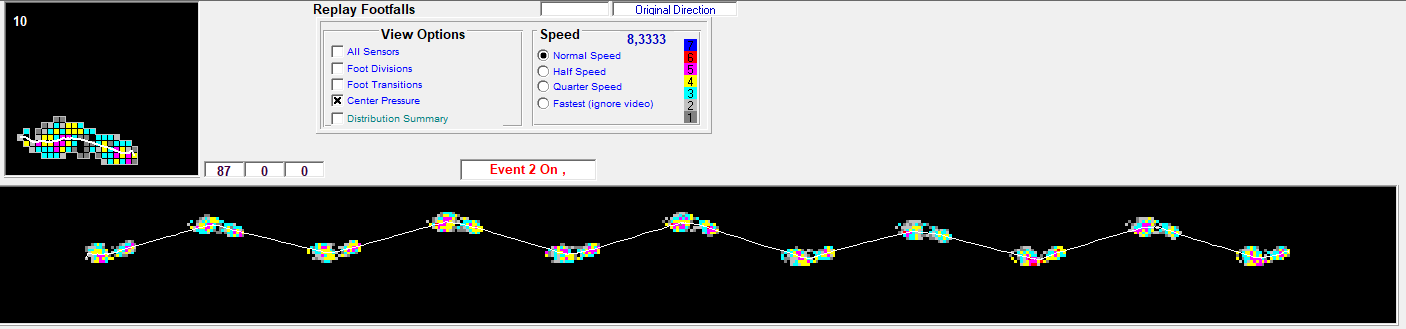

Equipped with resistive pressure sensors, the pressure sensor mat measures the pressure values under each foot and allows for the quantification of spatiotemporal parameters of walking.

The pressure data from each sensor is sampled over time, creating a list of timestamped pressure values for each activated sensor. This data can be useful for recognizing an activity and detecting changes in fall risk.
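To give an idea of how spatiotemporal parameters can be derived from such timestamped values, here is a minimal sketch. It is not the algorithm of the mat's software. It assumes the samples have already been grouped by footfall, that each sample is a hypothetical (time in seconds, position along the mat in meters, pressure) tuple, and that step length can be approximated by the distance between pressure-weighted footfall centers.

```python
def footfall_summary(samples):
    """Return (first_contact_time_s, pressure_weighted_center_m) for one footfall.

    samples: list of (time_s, x_m, pressure) tuples from the activated sensors.
    """
    first_contact = min(time_s for time_s, _, _ in samples)
    total_pressure = sum(pressure for _, _, pressure in samples)
    center_x = sum(x_m * pressure for _, x_m, pressure in samples) / total_pressure
    return first_contact, center_x


def step_parameters(footfalls):
    """Step time and step length between consecutive footfalls."""
    summaries = sorted(footfall_summary(f) for f in footfalls)  # order by contact time
    steps = []
    for (t0, x0), (t1, x1) in zip(summaries, summaries[1:]):
        steps.append({"step_time_s": t1 - t0, "step_length_m": abs(x1 - x0)})
    return steps


# Two hand-made footfalls, each with two activated sensors.
footfalls = [
    [(0.00, 0.10, 2.0), (0.05, 0.14, 2.0)],
    [(0.55, 0.70, 1.0), (0.60, 0.74, 3.0)],
]
steps = step_parameters(footfalls)
```

With these made-up values, the single step lasts 0.55 s and spans about 0.61 m.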

Capture from GAITRite software

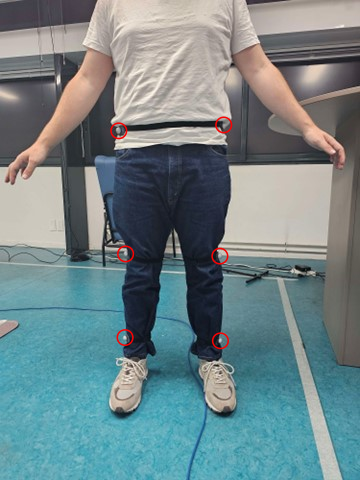

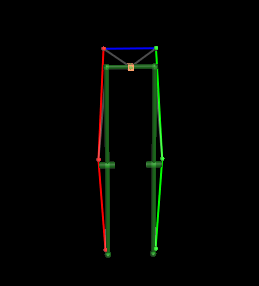

Motion capture (MoCap) is an infrared-based technology. Markers are placed on various body parts. The cameras comprising the motion capture system emit infrared light. The markers reflect this light. The cameras then detect these reflections and record the radial positions of each of the markers. Several infrared cameras are used to deduce the spatial position of the markers over time, thereby reconstructing the skeleton through trilateration.

Markers worn by the participant and their representation in Vicon’s Nexus software

To reconstruct the simplified skeleton, several markers must be worn. The skeleton reconstruction is performed using data from these markers in a 3D space.

From the positions of the markers in 3D space, we can extract, for example, the velocity over time of the marker corresponding to the left ankle. It is therefore possible to correlate the motion capture data with the data collected by the radar. This allows us to compare, verify, and annotate the spectrogram.
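A minimal sketch of this velocity extraction is given below. It assumes the marker trajectory has been exported as an array of 3D positions in meters with matching timestamps in seconds, and that the radar position is known in the same coordinate frame. The function name, the radar position, and the sign convention (negative when the marker approaches the radar) are assumptions for the example and would need to match the radar's own convention before comparison with the spectrogram.

```python
import numpy as np


def radial_velocity(positions_m, times_s, radar_position_m):
    """Radial velocity of one marker relative to a fixed radar position.

    positions_m: array of shape (n, 3); times_s: array of shape (n,).
    Returns the time derivative of the marker-to-radar distance, so values
    are negative while the marker approaches the radar.
    """
    positions = np.asarray(positions_m, dtype=float)
    times = np.asarray(times_s, dtype=float)
    radar = np.asarray(radar_position_m, dtype=float)
    ranges = np.linalg.norm(positions - radar, axis=1)
    # Finite differences of the range with respect to the timestamps.
    return np.gradient(ranges, times)


# Synthetic marker walking straight towards a radar at the origin at 1 m/s.
times = np.arange(100) * 0.01
positions = np.column_stack([5.0 - times, np.zeros(100), np.zeros(100)])
velocity = radial_velocity(positions, times, radar_position_m=[0.0, 0.0, 0.0])
```

On this synthetic trajectory the result is a constant -1 m/s. On real recordings, the marker positions are usually smoothed before differentiation, since finite differences amplify measurement noise.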

The classic camera captures color (RGB) images at regular time intervals.

The classic camera is used for annotating data from the other sensors mentioned earlier. Here, we are only interested in the activity being performed, which is why this data is anonymized by blurring the face.

ETIS

6 Avenue du Ponceau

95000 Cergy

ENSEA : +33130736610

CY Saint-Martin : +33134256633, +33134257541